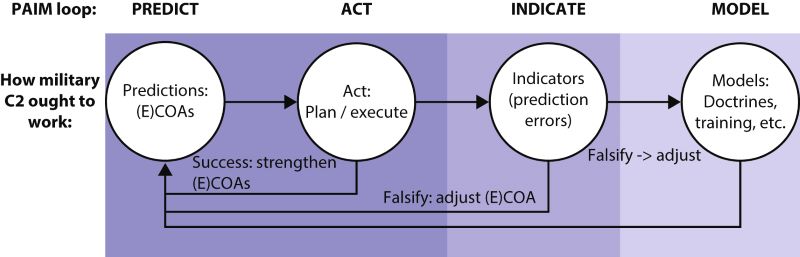

Our current understanding of decision-making is largely based on the Observe-Orient-Decide-Act (OODA) loop. A reinterpretation of the OODA loop through the lens of neuroscience, however, produces a new model called the PAIM loop (Predict, Act, Indicate and Model). The PAIM loop not only allows faster thinking and acting; it also reflects how the human brain actually works. Instead of starting from observations, PAIM starts from predictions, which directly enable a person to act. These predictions rely on models, such as military doctrine and training. Moreover, by actively searching for prediction errors through indicators, it becomes possible to adapt and learn more thoroughly than with the OODA loop. A better understanding of how the brain works leads to a deeper understanding of why humans act the way they do and how they can do things better.

New technology is not always required to defeat an opponent. More often, victory is achieved by being able to think and act faster than the enemy. Although this is easier said than done, this article will argue that the answer lies in a field the military would hardly expect: neuroscience. The current understanding of decision-making is largely based on the Observe-Orient-Decide-Act (OODA) loop, a model designed by John Boyd. This article will argue that reinterpreting the OODA loop through the lens of some recent discoveries in neuroscience makes clear how the human brain has evolved in such a way that it can think and act faster than the OODA loop prescribes. Moreover, since we 'are our brain', one could argue that there is no escape from the consequences of how the brain makes sense of the world and 'decides' how to act. On the one hand, this explains why armed forces already do some of the things they do; on the other hand, it forces them to re-evaluate some of their concepts of decision-making.

Recent discoveries in neuroscience show that the human brain has evolved in such a way that it can think and act faster than the OODA loop prescribes. Photo Remco Mulder

This article starts with a brief and simplified explanation of several neuroscientific theories. Next, these theories are used to reinterpret and enhance the OODA loop in order to think and act faster than the enemy. The article then proposes some possible ways of putting this theory into practice in the planning and execution of military operations. Finally, it argues that this theory can provide the missing piece of the puzzle currently called 'information-driven operations'. By looking at military decision-making through the lens of neuroscience, this article aims to demonstrate how it is possible to think and act differently.

Lessons from neuroscience

Until recently, few theories explained how information is processed and created in the brain, and much still remains unknown. This article relies primarily on the recent work of four scientists.[1] These scholars, Jeff Hawkins, Andy Clark, Anil Seth and Lisa Feldman Barrett, describe how the brain acquires new input and processes and creates new knowledge, each with a different focus, emphasis and terminology. This article concentrates only on the key ideas that, in the author's opinion, are relevant to military decision-making.

The brain continuously makes predictions

The human brain constructs the world through predictions. What someone perceives to be reality is actually a complex synthesis of sensory information and expectation.[2] Some scientists consider prediction the primary function and therefore the fundamental activity of the brain.[3] A thought experiment quoted from Barrett illustrates what is meant by predictions: ‘Keep your eyes open and imagine a red apple. If you are like most people, you will have no problem conjuring some ghostly image of a round, red object in your mind’s eye. You see this image because neurons in your visual cortex have changed their firing pattern to simulate an apple. If you were in the fruit section of the supermarket right now, these same firing neurons would be a visual prediction. Your past experience in that context (a supermarket aisle) leads your brain to predict that you would see an apple, rather than a red ball or the red nose of a clown. Once the prediction is confirmed by an actual apple, the prediction has, in effect, explained the visual sensations as being an apple’.[4]

Prediction is a necessary tool for the brain. If the brain were merely reactive, it would be too inefficient to keep someone alive. For example, the amount of visual data that a single retina can transmit equals that of a fully loaded computer network and would immediately overwhelm the brain. This also helps explain why humans do not remember events as snapshots: what they perceive and remember is in fact a simulation, or a ‘controlled hallucination’.[5] The wiring of the brain supports this. One would expect most connections to run from the eyes towards the brain regions that process vision, yet the opposite is true. There are about ten times as many connections running back from the visual cortex (i.e. the part of the brain where vision is likely constructed) towards other brain regions and the eyes.[6] Humans predict what they will probably see, and their observations mostly serve to minimize prediction errors.[7] Perception is therefore primarily a continuous process of prediction-error minimization. The subjective experience of seeing an object is determined by the content of the top-down prediction and not by the bottom-up sensory signals.[8]
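For readers who think in code, the idea of perception as prediction-error minimization can be caricatured in a few lines of Python. This is a minimal sketch under loud assumptions: a single number stands in for the brain's top-down prediction, and the function name and the `learning_rate` parameter are hypothetical, not taken from Clark, Seth or Barrett.

```python
"""Toy illustration of perception as prediction-error minimization.

Assumption: one scalar 'prediction' stands in for the brain's top-down
model; real cortical processing is far richer than this.
"""


def minimize_prediction_error(prior, observations, learning_rate=0.3):
    """Nudge a prediction toward each observation by a fraction of the error.

    Returns the final prediction and the list of prediction errors seen.
    """
    prediction = prior
    errors = []
    for observed in observations:
        error = observed - prediction         # bottom-up signal: the surprise
        errors.append(error)
        prediction += learning_rate * error   # top-down model is corrected
    return prediction, errors


if __name__ == "__main__":
    final, errs = minimize_prediction_error(prior=0.0, observations=[10.0] * 10)
    print(round(final, 2), [round(e, 2) for e in errs[:3]])
```

On a steady stream of identical observations the error shrinks at every step; once the prediction matches the input, the senses add almost nothing new, which is the sense in which observations 'mostly serve to minimize prediction errors'.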

Without predictions, humans would not be able to play games like baseball. One might intuitively think that a player first sees an incoming ball and then decides to raise a hand to catch it, but this is not what happens in someone with some experience of catching a ball. The brain has no time to calculate the trajectory of the ball and the required position of the hand; catching it can only be achieved through experience (i.e. practice). This is why soldiers are trained extensively in handling equipment and performing drills. What would normally be called ‘instinct’ is actually the brain being very adept at making predictions, as when changing a gun’s magazine without thinking. To make such predictions, the brain first needs to construct models.

Without predictions generated by the brain humans would not be able to play games like baseball. Photo U.S. Air Force, Michelle Gigante

How the brain constructs models

Jeff Hawkins starts from the established finding that the neurons of the neocortex are organized in so-called cortical columns. The exact workings of cortical columns are not relevant here; what matters is Hawkins’ proposal that every column in the neocortex has cells that create reference frames.[9] Put simply, these cells tell a person what is located where, which is the function of a reference frame.[10] According to Hawkins, every column learns multiple models of complete objects and can predict what should be seen or felt when looking at or touching an object at each of its locations.

Imagine being somewhere in a town without knowing exactly where. If you see a fountain, your brain will start recalling which places, in which towns, have a similar fountain. You then remember where you have seen this fountain before and identify the town and the grid square on a map, for example grid D3 (columns indicated by letters and rows by numbers). If you are not sure which town you are in, you might start moving and predict what you will see when moving east to grid E3. If the hypothesis that you are in town X, where E3 should contain a school, proves wrong because there is no school, your brain can eliminate town X and go on to investigate the likelihood of being in town Y. This is literally what people who are lost tend to do: keep moving until they recognize where they are.[11]
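The fountain example is, in effect, a search that eliminates hypotheses by testing predictions. The sketch below assumes everything it uses: the town names, grid squares and landmarks are hypothetical, and each town's 'model' is reduced to a lookup table from grid square to landmark.

```python
"""Sketch of the 'lost in a town' example as hypothesis elimination.

All town names, grid squares and landmarks are hypothetical.
"""

# Each model maps grid squares (letter = column, number = row) to a landmark.
TOWN_MODELS = {
    "town X": {"D3": "fountain", "E3": "school", "D4": "church"},
    "town Y": {"D3": "fountain", "E3": "bakery", "D4": "park"},
    "town Z": {"B2": "fountain", "C2": "station"},
}


def east_of(square):
    """Return the grid square one column to the east, e.g. 'D3' -> 'E3'."""
    column, row = square[0], square[1:]
    return chr(ord(column) + 1) + row


def candidates_for(landmark, models):
    """Every (town, square) pair where this landmark is expected."""
    return [
        (town, square)
        for town, grid in models.items()
        for square, feature in grid.items()
        if feature == landmark
    ]


def move_east_and_test(candidates, observed, models):
    """Keep only the hypotheses whose prediction for the next square matches."""
    surviving = []
    for town, square in candidates:
        next_square = east_of(square)
        predicted = models[town].get(next_square)  # the prediction
        if predicted == observed:                  # corroborated: keep it
            surviving.append((town, next_square))
        # otherwise the prediction failed and the hypothesis is eliminated
    return surviving


if __name__ == "__main__":
    hypotheses = candidates_for("fountain", TOWN_MODELS)
    print(hypotheses)
    print(move_east_and_test(hypotheses, observed="bakery", models=TOWN_MODELS))
```

Seeing a bakery after one step east leaves only town Y, because it is the only hypothesis whose prediction for the next square was confirmed.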

In the example above it might seem as if the brain looks at one map at a time each time it makes a comparison. In the neocortex, however, neurons are able to search through thousands of maps (i.e. models) simultaneously. This is why humans never experience going through a list of possible options in their heads. Someone who goes to work every day does not have to compare the faces of colleagues with a list of possible faces and corresponding names, but recognizes them instantly. However, encountering someone at work who does not belong there, such as a friend from high school, would make the brain double-check whether the image is correct and give the person a surge of feeling that something is wrong. This is because the situation does not fit any reference frame. From examples like these, Hawkins concludes that all knowledge in the brain is stored in reference frames relative to locations, be they physical locations or concepts that have an abstract location.

Reference frames are used to model everything humans know. The brain does this by associating sensory input with locations in reference frames. First, a reference frame allows the brain to learn the structure of something. This is important because everything in the real world is composed of features and surfaces arranged relative to each other. Hawkins gives the example of a face: a face is only a face because a nose, eyes and a mouth are arranged in particular relative positions. Second, once the brain has learned an object in a reference frame, it can manipulate that object mentally, for example by imagining what it would look like from another point of view. The brain does not compare exact pictures of faces with other pictures to verify that it is again looking at a face; it has learned a model of what a face consists of. Third, a reference frame is needed to plan and create movements. As Hawkins explains: ‘Say my finger is touching the front of my phone and I want to press the power button at the top. If my brain knows the current location of my finger and the location of the power button, then it can predict the movement needed to get my finger from its current location to the desired new one. A reference frame relative to the phone is needed to make this prediction.’[12] Reference frames are not limited to physical objects: humans also have reference frames for abstract concepts such as history, democracy or righteousness.[13]

One would intuitively think that there is only one model for every object in the world. Hawkins’ Thousand Brains theory turns this assumption around: there are thousands of models for every object. There is no single platonic ideal of an object in the mind; instead, there may be a thousand models of even one simple object. When someone imagines a bench, for example, many different images may come to mind, and one of them has to be picked to serve as a prototype model of a bench. These are just superficial models that depict only the outline of a bench. Note that every sub-element of a bench, such as its legs or its seat, also consists of models of its own.

But how does the brain select the right model? Hawkins assumes that the brain ‘votes’.[14] When it compares different models, it votes on which reference frames are closest to what is being observed.[15] In logical terms, this voting resembles corroboration and falsification: every piece of evidence that corroborates a model strengthens it and gives it a say in the vote, while every model that is falsified by an observation is left out of the vote. This explanation resembles prediction-error minimization.
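The voting described above can be sketched in terms of corroboration and falsification. This is an illustration of that logic only, not Hawkins' neural mechanism, which is still under research; the object models, the features and the rule of one vote per column per corroborated model are all assumptions.

```python
"""Sketch of model selection by 'voting' with corroboration and falsification.

Object models, features and the voting rule are hypothetical.
"""
from collections import Counter

# Hypothetical object models: feature -> whether the model expects it.
# Features a model says nothing about are simply absent from its dict.
MODELS = {
    "coffee cup": {"handle": True, "curved rim": True, "cylinder": True},
    "bowl": {"handle": False, "curved rim": True},
    "bench": {"handle": False, "flat top": True, "legs": True},
}


def vote(models, sensed_by_column):
    """Each column casts one vote for every model its sensed feature corroborates.

    A model that any column's observation contradicts is falsified and
    left out of the vote entirely.
    """
    votes = Counter()
    falsified = set()
    for feature in sensed_by_column.values():
        for name, expectations in models.items():
            expected = expectations.get(feature)
            if expected is True:
                votes[name] += 1          # corroboration: a say in the vote
            elif expected is False:
                falsified.add(name)       # falsification: excluded
    ranking = [(name, n) for name, n in votes.most_common() if name not in falsified]
    return ranking, falsified


if __name__ == "__main__":
    sensed = {"column 1": "handle", "column 2": "curved rim", "column 3": "cylinder"}
    print(vote(MODELS, sensed))
```

With a handle, a curved rim and a cylindrical surface reported by three columns, the handle falsifies the bowl and the bench, and the coffee cup wins with three corroborating votes.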

The human brain continuously makes predictions about ourselves and the world around us. It can do so because it continuously constructs models, which rely on reference frames selected by way of ‘voting’ or prediction-error minimization.

How to apply this theory to military decision-making

When armies prepare, be it for a war against another state or for counterinsurgency operations, they almost always have to deal with a large degree of uncertainty. Armed forces are hardly ever able to predict exactly where, how and against whom or what the battle will take place. This is why doctrines have been created and why numerous handbooks exist for tactical activities and procedures that foster a shared understanding of what troops ought to do. These are essentially models built on reference frames. In actual combat, armed forces often get only one or two chances, which is too few, and too costly, to build a decent reference frame. This is why it is important to train extensively. It also explains why military history is immensely important: like exercises, it upgrades reference frames with the experience of previous battles.

General Heinz Guderian visits Sedan after the German breakthrough in May 1940: along the entire front the French were left completely confused, because what they observed did not match their predictions. Photo Bundesarchiv

There are also very interesting analogies at the level of command and control. Military planners use the concept of the Course of Action (COA) to plan the activities of their own troops. The intelligence branch likewise uses the Enemy Course of Action (ECOA) to describe its predictions of what the enemy may do. COAs are predictions in the purest sense, built from models such as the attack or the defence. Even one's own COA is usually nothing more than a prediction. This may sound counter-intuitive, but military personnel often wrongly believe they are in full control of their own COA. They believe they have more accurate predictions, more influence on their own troops and a better reference frame of what those troops are capable of, and they are convinced they can more easily correct prediction errors. An example is when one-up[16] predicts that the reserve will have to be deployed, or allocated to a specific subunit. Pushing logistical supplies forward (instead of letting units pull them) is likewise a matter of having accurate models and making predictions. All military planners know the adage that no plan survives crossing the line of departure; a plan therefore remains nothing more than a prediction.

The analogy is even more striking in the concept of mission command and commander's intent. Subordinate commanders are expected to display initiative and creativity while understanding the effects the commander wants to achieve and why, even when the commander cannot give specific guidance at that moment: the subordinate commanders should be able to predict what the commander would intend. This requires not only an unambiguous and clear intent, but also extensive training and familiarity among commanders. Figuratively speaking, commanders need to peek inside each other's brains, and especially at the models those brains contain.

Explaining the French failures of May 1940

To make this theory more concrete, consider a historical example: the rapid German advance through Belgium and France in May 1940 during Operation Fall Gelb (Case Yellow). The French were completely overwhelmed by the speed of the German tanks and slow to react, especially in the vicinity of Sedan. They lacked speed and adaptability because of three deficiencies in their command and control (C2). First and most importantly, their reference frames, and consequently the models that determined their predictions, were very narrow and limited. In the minds of the French, tanks did not move faster than the infantry, let alone advance without it. Many French tanks were also technically limited to a speed of about 25 kilometres per hour, much slower than their German counterparts. Moreover, the French assumed that the Germans would neutralize all enemy resistance before advancing, instead of simply bypassing strongpoints as they did. Along the entire front the French were left completely confused, because what they observed did not match their predictions. Their models were too far off the mark. The Germans were thus able to achieve surprise in the conceptual domain, with huge effects in both the physical and the moral domain.

The second deficiency in the French C2 was the lack of prediction-error minimization. Reports of German advances were simply ignored or dismissed as false, since they contradicted the predictions of the French high command. The French did not recognize these observations as prediction errors and consequently did not adapt their plans or models. This example shows how the enemy's decision-making process can be disrupted. Third and finally, an often-noted failure of the French was their extremely centralized C2, completely unlike the German mission command (Auftragstaktik). The human brain works in exactly the opposite way to a centralized command such as that of the French: rather than passing information up the chain for a decision, it pushes predictions down the line in order to act quickly.

Reinterpreting the OODA loop

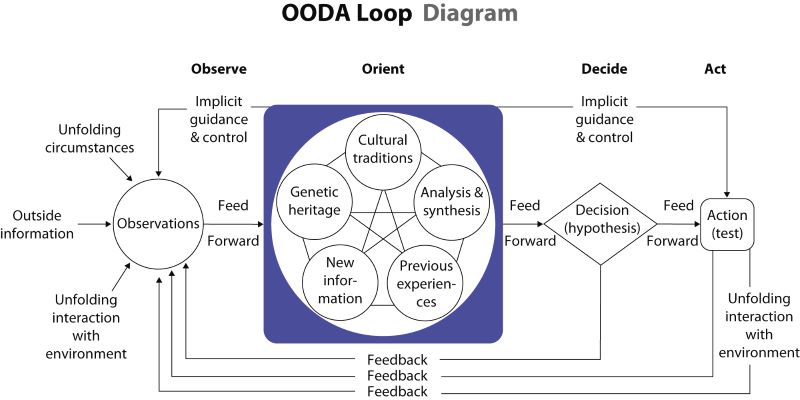

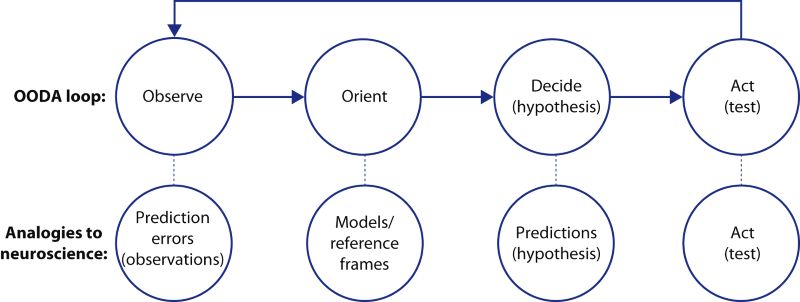

The historical example of the rapid German armoured advance shows that neuroscientific theories can shed new light on how armed forces currently interpret and apply the OODA loop (figure 1). The OODA loop starts with an Observation, which is then put into context in the Orient phase through a mix of existing factors, such as a person's previous experiences. This information then serves as a basis for Decisions, which result in Actions. It is a loop because new actions lead to new observations.

Figure 1 John Boyd’s OODA loop[17]

There is a strong resemblance between the OODA loop and how the brain makes sense of the world. Boyd already stressed the centrality of orientation, stating that it is essential to have ‘a repertoire of orientation patterns’ and ‘the ability to select the correct one.’[18] One can reinterpret this as reference frames, models and the voting mechanism. Figure 2 displays the analogies between the OODA loop and neuroscience.

Figure 2 Analogies between the OODA loop and how the brain makes sense of the world in order to act

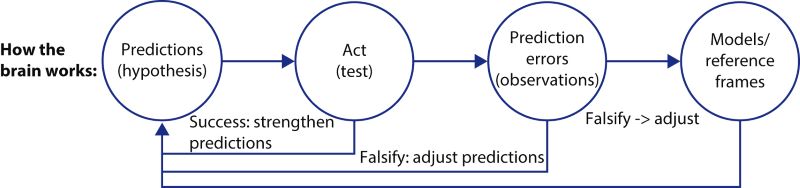

For the sake of brevity, this article focuses on the main difference between the OODA loop and the way the brain works: the brain takes the ‘steps’ in a different sequence, and not all steps are always required (see figure 3). Ideally, the loop can be as short as this: predictions -> act. Of course, these predictions rely on models that were observed or acquired at some earlier point, which makes the model a self-reinforcing loop. Remember that the brain has evolved in such a way that it does not first need to observe (everything). Instead, humans sometimes act without fully observing their environment, being sensitive only to prediction errors. This is why people can navigate through their houses in the dark, why they anticipate the next song on a familiar playlist before it starts, and why they blindly and hopelessly keep reaching for the bathroom towel whose hook has recently been moved just a few centimetres. As long as nothing seems to be wrong with one's predictions, these predictions and the models they rely on are only strengthened. Only when someone observes an anomaly will they change their current prediction and falsify the models it relies on. That anomaly literally becomes a new point of reference in the person's reference frame.

Figure 3 How the brain works and in what sequence

When planning operations at the tactical level, armed forces already develop multiple (E)COAs and assign indicators to them in order to corroborate or falsify them, and so determine which (E)COA is most likely to happen. This is why military intelligence designates Named Areas of Interest (NAI) and attaches specific indicators to them, for example along possible enemy avenues of approach: spotting an enemy armoured vehicle-launched bridge suggests that the enemy intends to cross a gap at that point. If armed forces really want to think and act faster than the enemy, they ought to apply this principle consistently: create (E)COAs as predictions and control them with clear indicators. The good news is that armed forces already do this, but unfortunately only in the planning phase of operations and almost exclusively where the enemy is concerned.
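Indicators at NAIs can be read as evidence that shifts belief between (E)COAs. One way to make 'strengthen or falsify' explicit, assumed here rather than prescribed by any doctrine, is Bayes' rule. The ECOA labels, NAIs, indicators and probabilities below are invented for illustration.

```python
"""Hypothetical sketch: weighting ECOAs by indicators observed at NAIs.

ECOA labels, NAIs, indicators and all probabilities are invented.
Bayes' rule is our choice of scoring method; doctrine does not prescribe it.
"""

# Prior belief in each enemy course of action.
# ECOA 1 = river crossing in the north, ECOA 2 = defend in place (assumed).
PRIORS = {"ECOA 1": 0.5, "ECOA 2": 0.5}

# Assumed probability that each indicator is observed if the ECOA is true.
LIKELIHOOD = {
    ("NAI 1", "AVLB spotted"): {"ECOA 1": 0.8, "ECOA 2": 0.1},
    ("NAI 2", "digging in observed"): {"ECOA 1": 0.2, "ECOA 2": 0.7},
}


def update(beliefs, indicator, observed):
    """Apply Bayes' rule for one indicator that was or was not observed."""
    posterior = {}
    for ecoa, prior in beliefs.items():
        p_seen = LIKELIHOOD[indicator][ecoa]
        p = p_seen if observed else 1.0 - p_seen
        posterior[ecoa] = prior * p
    total = sum(posterior.values())
    return {ecoa: value / total for ecoa, value in posterior.items()}


if __name__ == "__main__":
    beliefs = update(PRIORS, ("NAI 1", "AVLB spotted"), observed=True)
    beliefs = update(beliefs, ("NAI 2", "digging in observed"), observed=False)
    print({ecoa: round(p, 3) for ecoa, p in beliefs.items()})
```

Spotting the bridge at NAI 1 moves belief sharply toward the river crossing, and a later report that no digging in was observed at NAI 2 strengthens it further. An indicator with the same likelihood under every (E)COA would change nothing, which is a useful test of whether an indicator is worth collecting at all.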

Figure 4 The PAIM loop: how military Command and Control (C2) ought to work

As figure 4 shows, this existing model of (E)COAs and indicators closely resembles how the brain works (figure 3). This model is called the PAIM loop: Predict, Act, Indicate and Model. Again, it starts not with observations but with predictions. Accurate predictions that are likely to lead to success allow a much smaller loop consisting only of the first two steps: (E)COAs -> Plan/Execute. This is where armed forces can think and act faster than the enemy, because they do not have to go through the entire OODA loop. It does require good and accurate models, attained through extensive training or knowledge of the enemy or the environment. Another advantage of this model is that it builds in adaptation and learning more thoroughly than the OODA loop does. The second part of the loop forces one to actively look for and minimize prediction errors. Winning wars is often not about being right, but about being less wrong than the enemy. Moreover, military organizations always claim to ‘learn’ from their ‘mistakes’ and strive to be adaptive, yet often fail to operationalize this in current operations. Creating clear indicators for prediction errors forces people to look for mistakes actively.
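The PAIM loop can be sketched as a small class whose methods follow the four steps. It is a hypothetical illustration, not a fielded C2 system: the 'model' is reduced to a table of expected indicator states, and all class, method and indicator names are invented. What it tries to show is structural: when reports match the prediction, a cycle costs no more than predict and act, and the model is touched only when an indicator reveals a prediction error.

```python
"""Hypothetical sketch of the PAIM loop (Predict, Act, Indicate, Model).

The 'model' is a table of expected indicator states; every name is
illustrative, not doctrine.
"""
from dataclasses import dataclass, field


@dataclass
class PaimCommander:
    # Model: what we expect each indicator to show.
    model: dict
    # Indicators on which a prediction error occurred, i.e. what was learned.
    lessons: list = field(default_factory=list)

    def predict(self):
        """Predict: the current (E)COA follows directly from the model."""
        return dict(self.model)

    def act(self, prediction):
        """Act on the prediction without waiting for new observations."""
        return f"execute plan based on {sorted(prediction)}"

    def indicate(self, prediction, reports):
        """Indicate: return only the reports that contradict the prediction."""
        return {
            name: seen
            for name, seen in reports.items()
            if prediction.get(name) != seen
        }

    def update_model(self, errors):
        """Model: revise the model only where a prediction error occurred."""
        for name, seen in errors.items():
            self.model[name] = seen
            self.lessons.append(name)

    def cycle(self, reports):
        prediction = self.predict()
        order = self.act(prediction)
        errors = self.indicate(prediction, reports)
        if errors:  # the slower path: adapt and learn
            self.update_model(errors)
        return order, errors


if __name__ == "__main__":
    c = PaimCommander(model={"bridge at NAI 1 intact": True, "enemy reserve moving": False})
    print(c.cycle({"bridge at NAI 1 intact": True}))  # prediction confirmed
    print(c.cycle({"enemy reserve moving": True}))    # prediction error
    print(c.lessons)
```

The first cycle confirms the prediction and leaves the model unchanged; the second reports an unexpected movement of the enemy reserve, which is recorded as a lesson and written back into the model.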

The model described above has some interesting analogies with Gary A. Klein's Recognition-Primed Decision (RPD) model. Klein's research on RPD found that decision-making can be accelerated when decision-makers draw on their experience and avoid painstaking deliberation. Decision-makers usually recognize an acceptable course of action as the first one they consider, and they look for a workable option rather than the best one. However, RPD still requires a person to first observe and orient, and only then decide and act, albeit more quickly. RPD, for example, does not take into account that a person's models determine what he observes in the first place. Moreover, RPD is presented as an alternative alongside more analytical strategies, whereas this article argues that the human brain has evolved to use only one strategy, and that this is perhaps less a matter of choice than is presumed. Perhaps the neuroscientific theories referred to in this article, as well as the proposed application to decision-making, can help explain why RPD proves so effective.[19], [20]

Moving from theory to practice

Commanders should strive to command a military operation, in the planning as well as the execution phase, with multiple sound (E)COAs that have clear indicators. This means that during operations the commander will ideally provide the subunits with continuous updates of the evolving (E)COAs and their indicators. Indicators are not always about the enemy; they can also function as control or coordination measures. A phase line or coordination line, for instance, can serve as an indicator of the position or speed of one's own units. Units can also report their own predictions one-up. Predictions at a lower level (e.g. company) tend to be more short-term and local, yet often more precise, than those at a higher level (e.g. corps). The higher level may not have the best understanding of the local situation, but it probably has a better overview, since it receives indicators from multiple sources such as other subunits, intelligence assets or its own one-up.

Many current battlefield management systems allow new map overlays to be shared with units in real time. This article proposes that the commander and staff focus on pushing updates of (E)COAs and indicators up and down the line using overlays with tactical symbols drawn in dashed lines, meaning that the activity or object is anticipated but not yet confirmed. Armed forces already have a feel for this where the ECOA is concerned, but less so where their own troops are concerned. Soldiers normally watch the blue force tracker[21] to see where their own troops are, yet this does not tell them what a unit predicts it will achieve in the coming hours. A subunit can, for example, project its estimated advance on the map, making it easier to predict where problems or opportunities will arise during the operation. This is relevant not only for one-up, but also for adjoining units whose flanks might become exposed. In practice, the intelligence and operations branches will need to project their predicted (E)COAs on the map regularly, or communicate them by radio, add clear indicators, and use those indicators to update the (E)COAs continuously.
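A subunit's predicted advance can be treated as a set of indicators in its own right. The sketch below assumes a simple representation that no particular battlefield management system is claimed to use: each phase line carries a predicted time and stays 'anticipated' (drawn dashed) until it is reached. The phase-line names, times and the 30-minute tolerance are hypothetical.

```python
"""Hypothetical sketch: a subunit's predicted advance as an overlay.

Phase-line names, times and the 30-minute tolerance are assumptions.
"""
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class PhaseLinePrediction:
    phase_line: str
    expected: datetime
    status: str = "anticipated"  # drawn dashed until confirmed


def check_report(predictions, phase_line, reached_at, tolerance=timedelta(minutes=30)):
    """Confirm a phase line and return the deviation if it is a prediction error.

    Returns None when the unit is within tolerance, otherwise the deviation
    (positive = late, negative = early) to push to one-up and adjoining units.
    """
    for p in predictions:
        if p.phase_line == phase_line:
            p.status = "confirmed"  # now drawn as a solid line
            delay = reached_at - p.expected
            return delay if abs(delay) > tolerance else None
    raise KeyError(f"no prediction for {phase_line}")


if __name__ == "__main__":
    plan = [
        PhaseLinePrediction("PL BLUE", datetime(2025, 1, 1, 6, 0)),
        PhaseLinePrediction("PL RED", datetime(2025, 1, 1, 9, 0)),
    ]
    print(check_report(plan, "PL BLUE", datetime(2025, 1, 1, 6, 20)))
    print(check_report(plan, "PL RED", datetime(2025, 1, 1, 10, 15)))
```

Reaching PL BLUE twenty minutes late stays within tolerance and needs no message; reaching PL RED an hour and a quarter late is a prediction error that neighbouring units, whose flanks depend on that timing, should hear about.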

There is a false belief that technologies like Artificial Intelligence will solve the armed forces’ cognitive information overload. Photo MCD, Frank van Beek

The advantage of sharing new or updated (E)COAs, instead of simply sending reports and new orders, is that they directly answer the all-important question ‘So what?’. Control over units should be loosened, and they should be supplied only with what they need: solutions to their problems, such as ‘Where is the enemy and what is his intent?’ or ‘Do we have enough resources to achieve our mission?’ In short, the (initial) commander's intent should guide the operation, and only indicators (instead of tasks) should be passed along to support the unit in achieving its objective. This is the essence of mission command and intent. Commanders and their staffs should command by prediction, and control by indicators.

Indicator-driven operations?

A final lesson to be learnt from this theory is that current ambitions concerning information-driven operations are missing a crucial element. The problem with the current concept is that it does not answer which information is needed at what moment. Soldiers do not want better, faster or more information; they want possible solutions to their problems. There is a false belief that technologies like Artificial Intelligence will solve the armed forces’ cognitive information overload. Until it is possible to upgrade the cognitive capacities of a soldier’s brain, the biological limits to how much information troops can take in, especially under stress, need to be taken into account. After all, the human brain does not soak up large amounts of information, but actively predicts what information it expects to encounter and whether these predictions need correcting. The answer therefore probably lies in having only the relevant information that strengthens or falsifies a prediction, which is essentially an indicator. Indicators linked to (E)COAs need to drive the operation.

Conclusion

Looking at military decision-making from a different angle, this article has proposed a reinterpretation of the OODA loop, the PAIM loop (Predict, Act, Indicate and Model), which can provide an advantage over the enemy. It enables armed forces to think and act faster because, once they have accurate predictions that lead to success, they need only those predictions in order to act. Furthermore, this model builds in adaptation and learning more thoroughly than the OODA loop. There is still much to be said about the possible consequences of these neuroscientific theories for military decision-making, but let this be a start. Understanding how the brain works leads to a better understanding of why armed forces operate as they currently do and how this can be improved. Because one thing is clear: we still command, and fight against, armies made up of humans.

Remco Mulder regularly publishes on the military art in connection with science on his Substack blog, Beyond the Art of War.

[1] The four publications are: Jeff Hawkins, A Thousand Brains. A New Theory of Intelligence (New York, Basic Books, 2021); Andy Clark, The Experience Machine. How Our Minds Predict and Shape Reality (New York, Pantheon Books, 2023); Anil Seth, Being You. A New Science of Consciousness (London, Faber & Faber, 2021); Lisa Feldman Barrett, How Emotions Are Made. The Secret Life of the Brain (London, Pan Books, 2018).

[2] Clark, The Experience Machine, xiii.

[3] Feldman Barrett, How Emotions Are Made, 59.

[4] Idem, 59-60.

[5] Terminology of Anil Seth.

[6] The same is probably also valid for other sensory organs.

[7] Feldman Barrett, How Emotions Are Made, 59-60.

[8] Seth, Being You, 82-84.

[9] These cells are so-called ‘place’ and ‘grid’ cells and were discovered earlier by other scientists, yet only in the older parts of the brain, not the neocortex.

[10] Hawkins, A Thousand Brains, 66.

[11] Idem, 63.

[12] Idem, 50.

[13] Idem, 76, 80-81.

[14] Anil Seth proposes a similar theory with a different explanation called ‘prediction error minimization’.

[15] How this exactly works is still part of ongoing research.

[16] The command level directly above one’s own rank.

[17] ‘The OODA Loop Explained: The real story about the ultimate model for decision-making in competitive environments’, see: www.oodaloop.com; Illustration from: www.slidebazaar.com.

[18] Frans Osinga, Science, Strategy and War. The Strategic Theory of John Boyd (New York, Routledge, 2007) p. 236.

[19] Karol Ross, Gary Klein, Peter Thunholm et al, ‘The Recognition-Primed Decision Model’, Military Review, July-August 2004, pp. 6-10.

[20] Gary Klein, Judith Orasanu, Roberta Calderwood et al, Decision Making in Action. Models and Methods (New Jersey, Ablex Publishing Corporation, 1993) chapter 6.

[21] Every unit or vehicle’s actual position is displayed on the map by means of GPS.